February 8th, 2026

The Safe AI Writing Playbook for SaaS Content Teams (EEAT & Oversight)

Warren Day

Your CEO just mandated a 300% increase in blog output using AI. Your stomach drops.

You've seen the horror stories: leaked product roadmaps in ChatGPT, factual errors published by competitors, and Google's relentless focus on EEAT. The pressure to find the best AI SEO tools is immense, but the real question isn't which button to push. It's how to build guardrails so you can push it safely.

Here's the truth most listicles won't tell you: the tool itself matters far less than the system you wrap around it. You can hand the same AI model to two content teams and get radically different outcomes. One ships fast, ranks well, and sleeps soundly. The other publishes hallucinations, triggers a data breach investigation, and watches organic traffic crater when Google decides their content lacks expertise signals. The difference? The first team built a governed workflow. The second one bought software and hoped for the best.

For SaaS content leaders, the best AI SEO tool isn't a single application. It's a secure, governed system that combines the right models, structured prompts, human verification, and audit trails. That's what enables scale without sacrificing accuracy, security, or search credibility.

This playbook gives you that system in four parts. You'll learn how to establish an AI Content Safety Council that defines accountability before the first prompt runs. You'll get fact-first prompting frameworks that cut hallucination rates roughly in half. You'll see a human-in-the-loop workflow that formalizes the review step 97% of companies already apply to AI-generated content before hitting publish. And you'll evaluate tools through a security lens that protects your data, your brand, and your job.

No hype. No theory. Just the operational framework you can implement in the next 30 days to scale content with confidence instead of fear.

Why 'Best' No Longer Means 'Most Features': The New AI SEO Mandate

Search for "best AI SEO tools" and you'll drown in listicles comparing feature counts and pricing grids. None of them address the actual problem.

76% of SaaS companies already use AI in their products. Your content team is next, whether you're ready or not. But here's what those roundup posts won't tell you: the tool with the longest feature list is often the one that gets you fired.

Your leadership wants scale. You need to deliver it without publishing hallucinated statistics, accidentally leaking customer data into OpenAI's servers, or watching your rankings collapse because Google flags your output as AI slop.

The real evaluation framework ignores keyword density scores and content optimization widgets entirely. Every tool, every workflow, needs to pass three filters that actually matter:

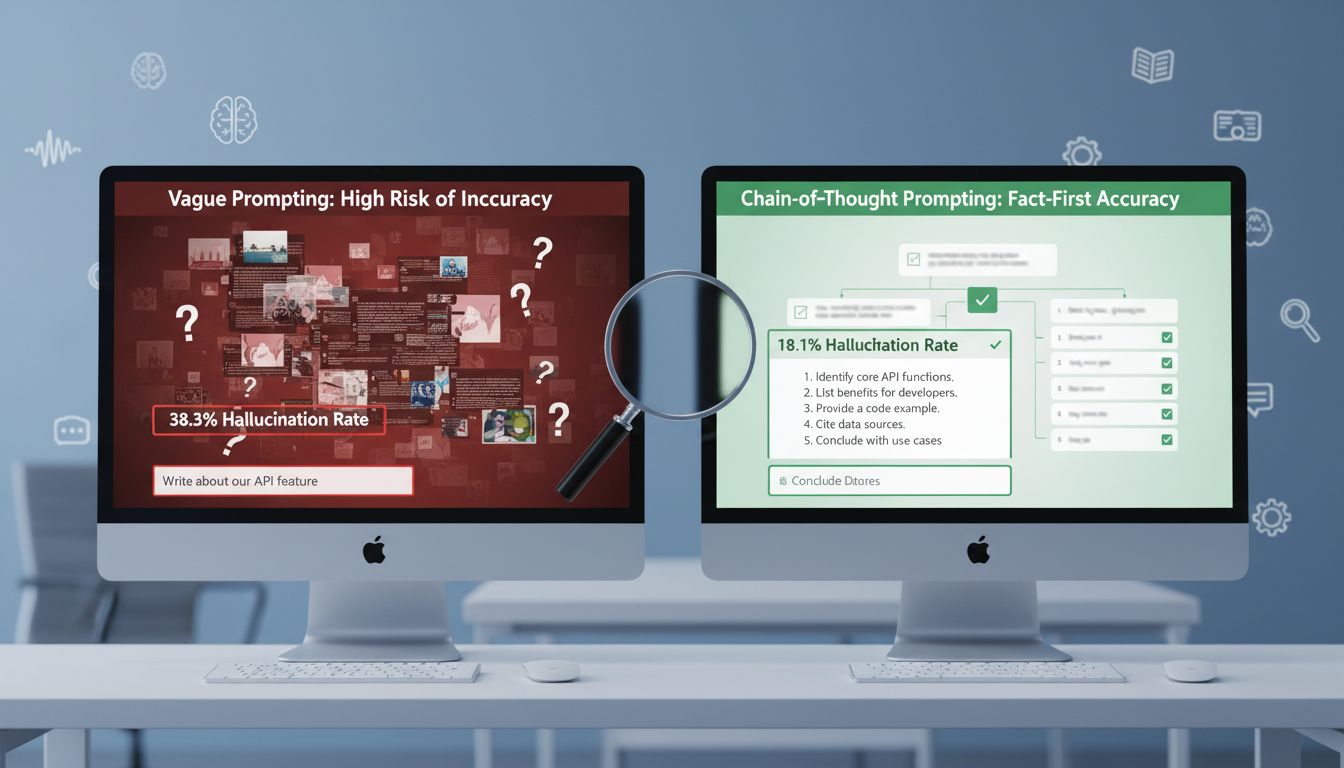

Accuracy: Can you verify the AI isn't making things up? Vague prompts push hallucination rates to 38.3% across major models. One fabricated claim about competitor pricing or a misquoted regulation costs you a customer and triggers a legal review.

Security: Where does your input data actually go? 10% of enterprise prompts contain sensitive corporate information. When your writers paste customer case studies or internal metrics into ChatGPT to "polish the draft," that data leaves your control and, depending on the plan's terms, may sit in the provider's logs or training pipeline.

Credibility: Will Google trust it? Search algorithms now prioritize Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T). AI content without clear human authorship, expert review, and verifiable sources gets buried. Or penalized.

These three filters connect directly to what your executives actually measure: brand reputation, organic traffic, compliance risk. Get them wrong and no AI keyword generator or optimization score will save you from the fallout.

The Hidden Costs of Unchecked AI: Beyond the Brand Blunder

Most content leaders fixate on the obvious risk—publishing a factual howler that goes viral on LinkedIn. That's embarrassing, but it's not the most expensive failure mode.

The real costs are quieter and compound over time.

Data leakage happens before you hit publish. 10% of enterprise prompts submitted to generative AI tools contain sensitive corporate data. Your writer pastes a customer case study draft into ChatGPT to "tighten the intro." That draft includes unannounced feature names, revenue figures, and a customer's internal project codename. Now the LLM provider—often buried as a data subprocessor in your AI tool's terms—has that data sitting in their training pipeline or logs.

You won't know until a security audit surfaces it. Or worse, until a competitor's content starts echoing your roadmap language. Platforms like Reco and Lasso Security exist precisely because this risk is measurable and growing.

Hallucinations aren't random. Research shows that vague prompts yield a hallucination rate of 38.3% across evaluated large language models, while Chain-of-Thought prompts reduce that rate to 18.1%. That's a 2x difference driven entirely by how you structure your input.

When you publish a false statistic or fabricated case study, you're not just correcting a blog post. You're fielding support tickets, apologizing to prospects, retraining your sales team, and watching your domain authority erode as backlinks get pulled. The "hallucination tax" isn't hypothetical. It's measured in lost deals and damaged trust.

Compliance and security tooling aren't optional anymore. Compliance budgets are surging as AI adoption accelerates. For many firms, compliance overhead now matches compute costs. You'll need monitoring, audit trails, data classification, and potentially dedicated AI security platforms. That's headcount, software spend, and operational drag you didn't budget for.

Google doesn't penalize AI content—it demotes shallow content. The search engine's position is clear: AI-generated material is fine if it's useful, original, and demonstrates expertise. But 97% of companies edit and review AI-generated content before publication precisely because raw AI output rarely satisfies E-E-A-T signals on its own.

Skip the human verification layer, and your content won't get penalized. It'll just be invisible.

These aren't edge cases. They're what happens when you treat AI tools as a shortcut instead of a system.

Part 1: The Governance Foundation – Building Your AI Content Safety Council

You can't govern what nobody owns. That's the first problem most content teams hit when leadership says "use AI responsibly."

Eighty-seven percent of executives claim they have AI governance frameworks in place. Fewer than 25% have actually operationalized them. The difference? A policy document gathering dust in a shared drive versus a living system that prevents your team from publishing hallucinations or leaking your product roadmap.

Here's how to close that gap in the next two weeks.

Form a cross-functional AI working group

Your AI content governance can't live solely in Marketing.

Schedule a 90-minute kickoff meeting with representatives from Content, Legal/Compliance, IT/Security, and Product Marketing. Make attendance mandatory. Set a recurring quarterly review.

This isn't a committee designed to slow you down. It's the group that ensures oversight and accountability across departments so you don't discover a compliance issue three months after a writer pastes customer data into ChatGPT. Each function brings a different lens: Legal spots regulatory landmines, Security identifies data leakage vectors, Product Marketing protects competitive positioning, and Content owns the workflow. You need all four perspectives in the room, or you're governing blind.

Draft your one-page AI content policy

Forget the 40-page handbook. You need a single-page document your team will actually read and reference.

Structure it around four sections:

Permitted use cases: Outlining, first drafts, headline variations, meta descriptions.

Banned inputs: Customer PII, unreleased product details, proprietary code, financial projections, anything marked confidential.

Mandatory review steps: All AI-generated content must be fact-checked by a human editor; claims require internal evidence or citation.

Attribution guidelines: When and how to disclose AI assistance (e.g., "Research assisted by AI tools; verified by [Author Name], [Title]").

Post this policy in your content hub, Slack, and onboarding docs. Make it impossible to miss.
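The "banned inputs" section is the easiest part of the policy to back with a simple automated check. What follows is a minimal sketch, not a complete data-loss-prevention tool: the pattern names and regular expressions are illustrative assumptions, and your council would define the real list.

```python
import re

# Hypothetical patterns for illustration; Legal and Security should own the real list.
BANNED_PATTERNS = {
    "email_address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "confidential_marker": re.compile(r"\b(confidential|internal only)\b", re.IGNORECASE),
    "revenue_figure": re.compile(r"\$\s?\d[\d,.]*\s?(k|m|million|billion)?\b", re.IGNORECASE),
}


def find_banned_inputs(prompt: str) -> list[str]:
    """Return the name of every banned-input pattern found in the prompt."""
    return [name for name, pattern in BANNED_PATTERNS.items() if pattern.search(prompt)]


draft_prompt = "Tighten this intro. CONFIDENTIAL: contact jane@example.com about the launch."
violations = find_banned_inputs(draft_prompt)
if violations:
    print("Blocked before sending:", ", ".join(violations))
```

A check like this catches careless pastes, not determined ones. Treat it as a reminder of the policy, with human review still doing the real work.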

Define accountability with a RACI matrix

Vague responsibility is no responsibility.

Build a simple RACI chart (Responsible, Accountable, Consulted, Informed) for each stage of your AI content workflow: Prompting, Drafting, Review, Approval, Publication.

Example: The writer is Responsible for the prompt and draft. The Content Lead is Accountable for final approval. Legal is Consulted on claims involving compliance or regulations. IT is Informed when new tools are added.

Humans remain fully accountable for AI outputs, not the model. This matrix makes that accountability explicit and enforceable. Without it, everyone assumes someone else is checking the facts, and nobody actually does.
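If you keep the RACI chart as data instead of a slide, you can check it for gaps automatically. Here is a rough sketch: the role assignments loosely follow the example above, the "Editor" role is an assumption, and the two rules enforced (exactly one Accountable, at least one Responsible per stage) are a common RACI convention, not something this playbook mandates.

```python
STAGES = ["Prompting", "Drafting", "Review", "Approval", "Publication"]

# Illustrative assignments only; replace with your own team's roles.
RACI = {
    "Prompting":   {"R": ["Writer"], "A": ["Content Lead"], "C": [], "I": []},
    "Drafting":    {"R": ["Writer"], "A": ["Content Lead"], "C": [], "I": []},
    "Review":      {"R": ["Editor"], "A": ["Content Lead"], "C": ["Legal"], "I": []},
    "Approval":    {"R": ["Content Lead"], "A": ["Content Lead"], "C": ["Legal"], "I": ["IT"]},
    "Publication": {"R": ["Writer"], "A": ["Content Lead"], "C": [], "I": ["IT"]},
}


def validate_raci(matrix: dict) -> list[str]:
    """Return a list of problems; an empty list means every stage has a clear owner."""
    problems = []
    for stage in STAGES:
        row = matrix.get(stage)
        if row is None:
            problems.append(f"{stage}: no assignments at all")
            continue
        if len(row["A"]) != 1:
            problems.append(f"{stage}: needs exactly one Accountable role")
        if not row["R"]:
            problems.append(f"{stage}: needs at least one Responsible role")
    return problems


print(validate_raci(RACI) or "Every stage has an owner")
```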

A policy in a drawer is theater. The next three sections show you how to operationalize it, starting with the prompts themselves.

Part 2: Fact-First Prompting – Engineering Your Inputs for Accurate Outputs

Your governance council is in place. Now you need to fix the prompts that feed your AI workflow, because the difference between a hallucination-prone draft and a fact-checkable one isn't the model. It's the instruction.

Research from Frontiers in Artificial Intelligence is clear on this: vague prompts yield a hallucination rate of 38.3%, while Chain-of-Thought (CoT) prompts reduce that to 18.1%. That's a 53% reduction in factual errors from better instructions alone. Not marginal. This is the difference between publishing three corrections per week and one per month.
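The 53% figure is a relative reduction, which is worth separating from the absolute drop in percentage points. The arithmetic, using only the two rates quoted above:

```python
vague_rate = 38.3  # hallucination rate with vague prompts, in percent
cot_rate = 18.1    # hallucination rate with Chain-of-Thought prompts, in percent

absolute_reduction = vague_rate - cot_rate                     # percentage points
relative_reduction = absolute_reduction / vague_rate * 100     # percent of the original rate

print(f"Absolute: {absolute_reduction:.1f} points, relative: {relative_reduction:.1f}%")
```

The relative reduction comes to about 52.7%, which rounds to the 53% quoted.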

The anatomy of a vague prompt (the kind that creates risk):

"Write a blog post about our new API security feature for enterprise customers."

That's it. No context, no facts, no constraints. The model will invent benefits, cite nonexistent case studies, and confidently describe capabilities your product doesn't have.

The same request, structured as a Chain-of-Thought prompt:

"You are writing a product announcement blog post for IT security leaders at mid-market SaaS companies.

Step 1: Explain the problem: API keys stored in code repositories are the #1 cause of data breaches in SaaS (cite our 2024 Security Report).

Step 2: Describe how our new token rotation feature solves it: automated 24-hour key expiry + Slack alerts.

Step 3: Provide one customer proof point: [Customer Name] reduced security incidents by 60% in 90 days (link to case study).

Step 4: Close with a specific CTA: Book a 15-minute demo with our security team.

Do not make claims about compliance certifications, integrations, or pricing unless I provide them. If you lack information to complete a step, flag it with [NEEDS VERIFICATION]."

The second prompt doesn't just ask for output. It scaffolds reasoning, provides source material, and sets refusal rules.
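To keep writers from retyping that structure each time, the steps and refusal rules can live in a small template. This is one possible sketch: the `Step` class and `build_prompt` function are hypothetical names, and the only behavior assumed is that a step with no approved fact gets flagged instead of left for the model to fill in.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Step:
    instruction: str
    fact: Optional[str] = None  # approved fact or source; None means nothing verified yet


REFUSAL_RULES = (
    "Do not make claims about compliance certifications, integrations, or pricing "
    "unless I provide them. If you lack information to complete a step, "
    "flag it with [NEEDS VERIFICATION]."
)


def build_prompt(audience_line: str, steps: list) -> str:
    """Assemble a step-by-step prompt that carries its own facts and refusal rules."""
    lines = [audience_line, ""]
    for number, step in enumerate(steps, start=1):
        fact = step.fact if step.fact else "[NEEDS VERIFICATION]"
        lines.append(f"Step {number}: {step.instruction} Approved facts: {fact}")
    lines += ["", REFUSAL_RULES]
    return "\n".join(lines)


steps = [
    Step("Explain the problem.", "API keys stored in code repositories (our 2024 Security Report)"),
    Step("Describe how token rotation solves it.", "Automated 24-hour key expiry + Slack alerts"),
    Step("Provide one customer proof point."),  # no approved case study supplied yet
    Step("Close with a specific CTA.", "Book a 15-minute demo with our security team"),
]
prompt = build_prompt(
    "You are writing a product announcement blog post for IT security leaders "
    "at mid-market SaaS companies.",
    steps,
)
print(prompt)
```

The missing proof point surfaces as a visible flag in the prompt itself, so the gap is caught before the model has a chance to invent one.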

How to stop AI from making up facts

- Use Chain-of-Thought reasoning. Break complex requests into sequential steps ("First explain X, then Y, then Z").

- Provide source documents in your prompt. Paste URLs, approved messaging docs, or data points directly into the context window.

- Set model temperature to 0.3–0.5. Lower temperature settings reduce randomness and improve factual consistency for non-creative tasks.

- Use few-shot examples. Show the model 2–3 examples of correctly formatted, fact-checked output before asking for new content.

- Implement a human verification step. No prompt is perfect. Treat AI output as a first draft that requires editorial review and fact-checking against your internal sources.
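Two of those tactics, few-shot examples and a low temperature, can be enforced in code so they are not left to individual habit. The sketch below builds a request payload without calling any service. The `messages` and `temperature` field names mirror common chat-style APIs but are an assumption here, so check your provider's documentation before reusing them.

```python
def build_request(system_rules: str, examples: list, task: str, temperature: float = 0.3) -> dict:
    """Build a chat-style payload with few-shot examples and a bounded temperature."""
    if not 0.3 <= temperature <= 0.5:
        raise ValueError("Policy range for factual content is 0.3 to 0.5")
    messages = [{"role": "system", "content": system_rules}]
    # Each example is a (brief, approved_output) pair from already fact-checked content.
    for brief, approved_output in examples:
        messages.append({"role": "user", "content": brief})
        messages.append({"role": "assistant", "content": approved_output})
    messages.append({"role": "user", "content": task})
    return {"messages": messages, "temperature": temperature}


request = build_request(
    system_rules="Use only the facts provided. Flag gaps with [NEEDS VERIFICATION].",
    examples=[
        ("Brief for post A", "Fact-checked post A"),
        ("Brief for post B", "Fact-checked post B"),
    ],
    task="Brief for the new post",
)
print(len(request["messages"]), "messages at temperature", request["temperature"])
```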

Beyond prompting: RAG and verification frameworks

For high-stakes content like whitepapers, compliance docs, or customer-facing claims, Retrieval-Augmented Generation (RAG) goes further. The model queries your approved knowledge base during generation, grounding every claim in a verified source document. Frameworks like EVER add real-time hallucination detection and correction.

Most teams won't need RAG on day one. But if you're publishing 50+ pieces per month or operating in regulated verticals, the investment in structured retrieval pays for itself in risk reduction.
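To make the retrieval idea concrete, here is a deliberately naive sketch. It ranks approved documents by simple word overlap with the question and refuses to answer when nothing matches. Production RAG systems typically use embeddings and a vector store instead, and the document names and contents below are placeholders, not real files.

```python
import re

# Placeholder knowledge base; in practice this would be your approved messaging docs.
APPROVED_DOCS = {
    "token-rotation.md": "Token rotation expires API keys automatically every 24 hours and sends Slack alerts.",
    "pricing.md": "Pricing is published on the public pricing page and reviewed quarterly.",
}


def tokenize(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: dict, top_k: int = 1) -> list:
    """Return up to top_k (name, text) pairs that share at least one word with the question."""
    query = tokenize(question)
    ranked = sorted(docs.items(), key=lambda item: len(query & tokenize(item[1])), reverse=True)
    return [(name, text) for name, text in ranked[:top_k] if query & tokenize(text)]


def grounded_prompt(question: str, docs: dict) -> str:
    sources = retrieve(question, docs)
    if not sources:
        return f"[NEEDS VERIFICATION] No approved source covers: {question}"
    context = "\n".join(f"[{name}] {text}" for name, text in sources)
    return f"Answer using only these sources and cite them by name.\n{context}\n\nQuestion: {question}"


print(grounded_prompt("How often do API keys expire?", APPROVED_DOCS))
```

Word overlap has no notion of stop words or synonyms, so it will mis-rank real queries. The point is the shape: retrieve first, then constrain the model to what was retrieved.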

The prompt is your first line of defense. Get it right, and your editors spend time refining tone, not rewriting fiction.

Part 3: The Governed AI Content Workflow – A Visual Blueprint

Your prompts are tighter. Your council knows who owns what. Now you need a repeatable workflow that connects them—one that turns good intentions into production reality.

Most teams treat AI content like a black box: brief goes in, draft comes out, someone cleans it up. That's not governance. That's hope with extra steps.

Here's the gate-based workflow that 97% of companies already use in some form, made explicit and auditable:

graph TD

A[Step 1: Brief & Fact Pack Assembly] --> B[Step 2: AI Draft Generation]

B --> C[Step 3: Mandatory Expert Review]

C --> D[Step 4: E-E-A-T Enhancement]

D --> E[Step 5: Final Compliance & Security Check]

E --> F[Publish]

C -->|Fails fact-check| B

E -->|Fails security scan| C

Step 1: Brief & Fact Pack Assembly

Your content strategist creates the brief and gathers the fact pack—product docs, customer data, internal benchmarks, competitor research. This is the raw material that prevents hallucination. The strategist owns this gate. Nothing moves forward without verified inputs.

Step 2: AI Draft Generation

Using the fact-first prompts from Part 2, the model generates a structured draft. Temperature set to 0.3–0.5. Chain-of-Thought reasoning required. Citations mandatory.

The output is a starting point, not a finished asset.

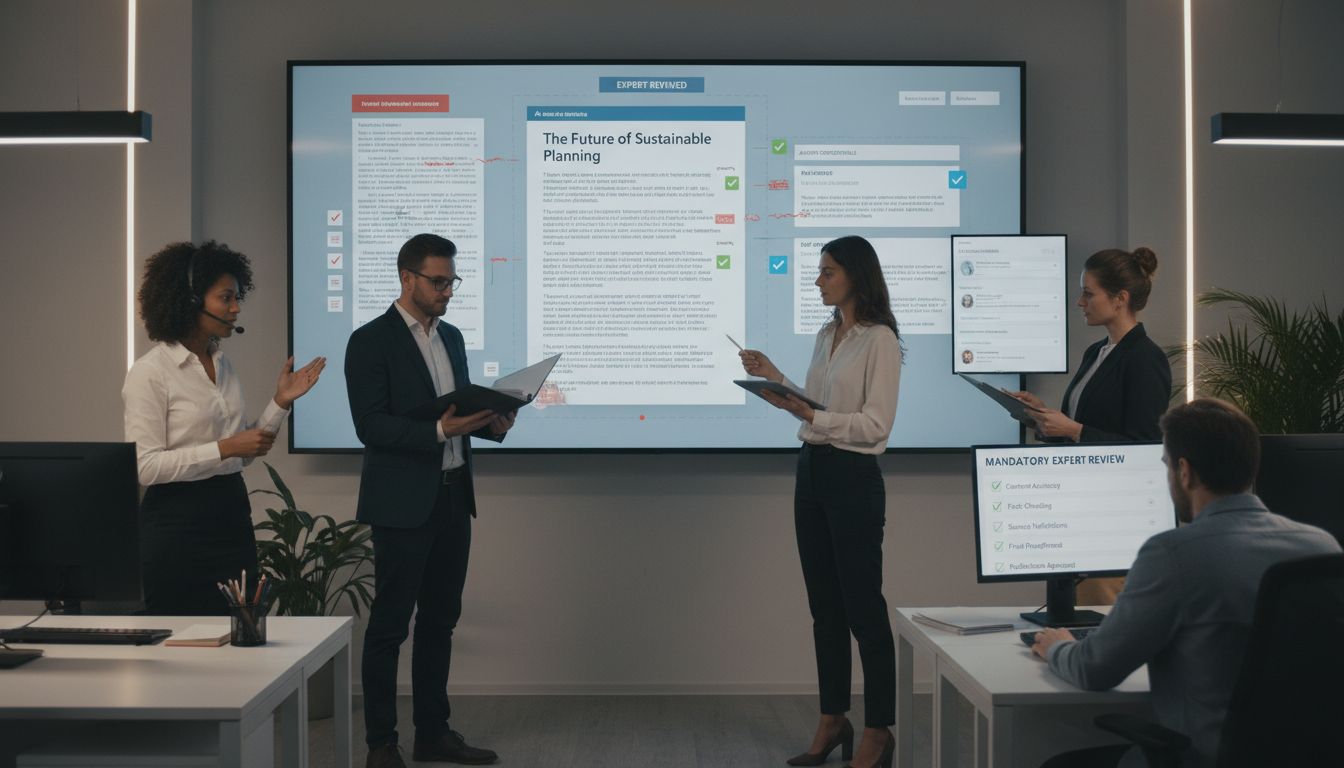

Step 3: Mandatory Expert Review (The Human Firewall)

This is where human accountability becomes non-negotiable. Your subject-matter expert—product marketer, engineer, or domain specialist—fact-checks every claim, adds analysis the AI can't provide, and layers in brand voice. Their name goes on the piece. If they wouldn't stake their reputation on it, it doesn't pass.

Step 4: E-E-A-T Enhancement

Add the author byline with credentials. Link to proprietary data, case studies, or original research. Make sure the piece offers a perspective only your team can provide—not generic advice ChatGPT could write for anyone. This step is what separates content that ranks from content that gets cited.

Step 5: Final Compliance & Security Check

Run the draft through Copyscape or Quetext to catch unintentional plagiarism. Verify no sensitive data leaked into prompts or outputs. The compliance owner from your RACI matrix signs off.

Only then does it publish.

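Each gate has an owner. Each rejection loops back to an earlier gate with documented reasons: a failed fact-check returns the piece to draft generation, and a failed security check returns it to expert review. No shortcuts, no "just this once."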
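The gates and rejection loops in the diagram translate directly into a small state machine, which is handy if you want your CMS or project tracker to enforce the order. A minimal sketch follows: the gate names come from the workflow above, while the `next_gate` function and the sample review outcomes are illustrative.

```python
GATES = [
    "Brief & Fact Pack Assembly",
    "AI Draft Generation",
    "Mandatory Expert Review",
    "E-E-A-T Enhancement",
    "Final Compliance & Security Check",
]

# Where a piece goes when a gate rejects it, following the arrows in the diagram.
ON_FAIL = {
    "Mandatory Expert Review": "AI Draft Generation",
    "Final Compliance & Security Check": "Mandatory Expert Review",
}


def next_gate(current: str, passed: bool) -> str:
    """Return the next gate, or 'Publish' after the final gate passes."""
    if passed:
        index = GATES.index(current)
        return GATES[index + 1] if index + 1 < len(GATES) else "Publish"
    if current not in ON_FAIL:
        raise ValueError(f"No rejection path defined for gate: {current}")
    return ON_FAIL[current]


# Simulated outcomes for one piece: the first expert review fails its fact-check.
outcomes = [True, True, False, True, True, True, True]
current = GATES[0]
history = []
for passed in outcomes:
    history.append((current, "pass" if passed else "fail"))
    current = next_gate(current, passed)

print(current)
print(history)
```

The `history` list doubles as the documented trail of which gate rejected a piece and when.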

This isn't slower. It's defensible.

Part 4: Evaluating 'Best AI SEO Tools' Through a Security Lens

Now that you have a workflow, you need tools that fit inside it—not tools that blow it apart.

Most "best AI SEO tools" comparisons rank features: keyword volume, content templates, SERP tracking. None of them ask whether the vendor will sign a Data Processing Agreement, or whether your customer list gets fed to OpenAI as a subprocessor.

That's the gap this section closes.

The Security-First Evaluation Framework

Before you compare features, compare these four dimensions:

| Dimension | What to Evaluate | Why It Matters |
|---|---|---|
| Core SEO Features | Keyword research, content briefs, on-page optimization, rank tracking | Does it solve your actual bottleneck? |
| Security & Compliance | DPAs, SOC 2, ISO 27001, GDPR/HIPAA readiness, subprocessor disclosure | Can you defend it in an audit? |
| Integration & Auditability | API access, prompt logging, output versioning, CMS compatibility | Can you trace what was generated and by whom? |
| Cost Structure | Per-seat, per-token, flat-rate; hidden overage fees | Does pricing scale with your workflow or punish it? |

If a tool scores high on features but fails security, it's not on your shortlist.
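That rule, security as a pass/fail gate with features compared only afterwards, is easy to encode in a vendor scorecard. Below is one way to sketch it. The vendor names, the three security fields chosen, and the 0 to 10 feature score are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Vendor:
    name: str
    signs_dpa: bool
    discloses_subprocessors: bool
    prompt_logging: bool
    feature_score: int  # your own 0-10 rating of core SEO features


def shortlist(vendors: list) -> list:
    """Drop any vendor that fails a security check, then rank the rest by features."""
    passed = [
        v for v in vendors
        if v.signs_dpa and v.discloses_subprocessors and v.prompt_logging
    ]
    return sorted(passed, key=lambda v: v.feature_score, reverse=True)


vendors = [
    Vendor("Vendor A", True, True, True, feature_score=6),
    Vendor("Vendor B", False, True, True, feature_score=10),  # best features, no DPA
    Vendor("Vendor C", True, True, True, feature_score=8),
]
print([v.name for v in shortlist(vendors)])
```

Vendor B has the highest feature score and still never reaches the ranking step, which is exactly the behavior the framework asks for.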

Mandatory Vendor Questions

Ask every vendor—whether it's a platform like Semrush AI SEO or a standalone AI writer—these five questions:

- Do you sign Data Processing Agreements (DPAs)? If they hesitate, walk away.

- Who are your subprocessors? Many SaaS tools list OpenAI, Anthropic, or Google as data subprocessors. You need to know where your prompts and outputs travel.

- What certifications do you hold? OpenAI offers GDPR, CCPA, and ISO 27001; Anthropic has SOC 2 and HIPAA; Google Gemini has SOC 1/2/3 and ISO 27001/27017/27018. Match certifications to your industry requirements.

- Do you offer on-premise or private cloud deployment? For regulated industries or proprietary data, this is non-negotiable.

- Can we audit prompt and output logs? If you can't trace what was generated, you can't prove compliance.

Category Analysis: Where Each Tool Type Fits

All-in-One SEO Suites (e.g., Semrush, Ahrefs with AI features): These integrate keyword research, competitive analysis, and AI drafting in one platform. The advantage is consolidated data. The risk is that you're locked into their AI provider and their terms. Check whether they allow you to bring your own model or enforce your own prompting rules.

Specialist AI Writers (e.g., Jasper, Copy.ai): Built for speed and templates. Great for high-volume, low-risk content (social posts, ad copy). Terrible for thought leadership or compliance-sensitive topics unless you layer in human review and fact-checking.

Open-Source/On-Prem Models (e.g., Llama): Maximum control, zero third-party subprocessor risk. You own the prompts, the outputs, and the infrastructure. The trade-off is setup complexity and the need for in-house ML/DevOps support.

Security Layers (e.g., Guardrails AI): Not content tools. These are runtime safety checks that sit between your prompts and the model. They block PII, enforce output schemas, and log violations. Guardrails AI has 5.9k GitHub stars and enterprise customers like Robinhood.

The 'Free AI SEO Tools' Trap

Free tiers are tempting. They're also dangerous.

Most free AI SEO tools—whether it's a free AI keyword generator or a freemium content assistant—don't sign DPAs. 10% of enterprise prompts contain sensitive corporate data, and free tools have zero obligation to protect it. Your competitor analysis, your product roadmap, your customer pain points—all fair game for model training unless the terms explicitly say otherwise.

Use free tools for public research and low-stakes experimentation. Never for proprietary strategy, customer data, or anything you'd mark "confidential" in a deck.

Your 'Tool' Is Probably a Stack

Here's what most successful teams actually run:

- LLM provider (OpenAI, Anthropic, or self-hosted Llama) with a signed enterprise agreement

- Prompting interface (custom-built or a platform like Dust, Relevance AI)

- Security layer (Guardrails AI, Lasso Security, or equivalent)

- CMS integration (WordPress, Contentful, Webflow) with version control and approval workflows

An AI keyword generator isn't a standalone tool. It's one function inside this governed stack. You feed it a topic cluster, it returns seed keywords, a human validates them against search intent, and then they enter your content brief template.

Stop shopping for a single "best" tool. Start building a defensible stack.

Measuring Safe Scale: KPIs for AI-Augmented Content Operations

Your VP of Marketing wants proof that your AI governance framework isn't slowing you down. Your CEO wants to know if the investment is paying off.

Neither cares about "words produced per week."

That metric is a trap. When you optimize for volume alone, you incentivize writers to skip fact-checking, rush reviews, and publish AI drafts with minimal human oversight. You get scale, but you also get hallucinations, brand damage, and search penalties.

You need metrics that prove your system is safely scaling, not just scaling.

Track input metrics to monitor process health. Start with Factual Error Rate: the percentage of AI drafts flagged for inaccuracies during human review, before publication. Research shows that tracking factual error rate alongside accuracy is table stakes for AI governance. Aim to trend this downward as your prompts and fact-packs improve.

Next, measure Human Edit Time Ratio: the share of editing time your editors spend on substantive rewriting versus light polishing. If AI is working, you should see a 30–50% reduction in edit time, not a drop to zero. Zero edit time means you're publishing unvetted drafts. A 50% reduction means AI is doing the heavy lifting while humans add expertise and judgment.

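Finally, log Sensitive Data Flag Rate: the share of prompts flagged for sensitive corporate data by a monitoring tool such as Reco. The finding that 10% of enterprise prompts contain sensitive corporate data gives you a baseline to compare against. A rising flag rate signals training gaps or workflow leaks.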
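All three input metrics reduce to simple ratios, so a spreadsheet or a few lines of code will do. The sketch below uses one plausible set of definitions (flagged items over total items, and edit time against a human-only baseline). Those formulas and the sample numbers are assumptions for illustration, not measured results.

```python
def rate(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`; 0.0 when there is no data yet."""
    return 0.0 if whole == 0 else 100 * part / whole


def edit_time_reduction(baseline_minutes: float, current_minutes: float) -> float:
    """Percentage drop in edit time relative to the human-only baseline."""
    return 100 * (baseline_minutes - current_minutes) / baseline_minutes


# Made-up monthly numbers.
factual_error_rate = rate(part=6, whole=40)            # drafts flagged in review / drafts reviewed
sensitive_flag_rate = rate(part=3, whole=150)          # prompts flagged / prompts sent
time_saved = edit_time_reduction(baseline_minutes=120, current_minutes=72)

print(f"Factual error rate: {factual_error_rate:.1f}%")
print(f"Sensitive data flag rate: {sensitive_flag_rate:.1f}%")
print(f"Edit time reduction: {time_saved:.1f}%")
```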

Measure output metrics to prove business impact. Track organic traffic, keyword rankings, and time-on-page for AI-assisted content versus human-only baselines. 97% of companies edit AI content before publication, and those edits should translate into engagement gains.

Create an EEAT Signal Score: a simple yes/no checklist for each published piece. Author bio present? First-hand experience cited? External sources linked? Original data included? Score each article and track the average. Google rewards these signals. Your metrics should too.
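The checklist becomes a score by counting how many of the four questions get a "yes". Here is a minimal sketch: the field names are hypothetical, and equal weighting of the four checks is an assumption you may want to revisit.

```python
CHECKS = ["author_bio", "first_hand_experience", "external_sources", "original_data"]


def eeat_score(article: dict) -> int:
    """Count how many of the four checklist items the article satisfies (0 to 4)."""
    return sum(bool(article.get(check)) for check in CHECKS)


def average_score(articles: list) -> float:
    return sum(eeat_score(a) for a in articles) / len(articles) if articles else 0.0


# Illustrative entries, one dict per published piece.
articles = [
    {"author_bio": True, "first_hand_experience": True, "external_sources": True, "original_data": True},
    {"author_bio": True, "first_hand_experience": False, "external_sources": True, "original_data": False},
]
print([eeat_score(a) for a in articles], average_score(articles))
```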

Build an audit trail for every piece. Log the prompt used, the model version, the reviewer's name, and any factual corrections made.

Auditability and continuous monitoring verify compliance with ethical guidelines and give you a dataset to refine prompts over time.
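An audit record needs only the four fields listed above plus a timestamp. One way to sketch it is as a JSON line that can be appended to a log file or sent to whatever logging system you already run. The function names, field names, and the model version string are illustrative assumptions.

```python
import json
from datetime import datetime, timezone


def audit_record(prompt: str, model_version: str, reviewer: str, corrections: list) -> dict:
    """Capture who reviewed what, with which prompt and model, and what was fixed."""
    return {
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "prompt": prompt,
        "model_version": model_version,
        "reviewer": reviewer,
        "corrections": corrections,
    }


def to_json_line(record: dict) -> str:
    """Serialize one record as a single line, suitable for an append-only log."""
    return json.dumps(record, ensure_ascii=False)


record = audit_record(
    prompt="Step 1: Explain the problem...",
    model_version="example-model-v1",  # placeholder, not a real model name
    reviewer="Jane Doe, Product Marketing",
    corrections=["Removed unverified compliance claim"],
)
print(to_json_line(record))
```

Over time, the `corrections` field shows which kinds of claims your prompts keep getting wrong, and that tells you where to refine them.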

When leadership asks if AI is working, show them error rates trending down, edit time dropping, and organic traffic climbing. That's how you prove governance enables scale instead of throttling it.

Your 30-Day Safe AI Implementation Plan

You've built the framework. Now comes the hard part: rolling it out without turning your content calendar into a bottleneck.

Here's how to go from policy to production in four weeks.

Week 1: Assemble and align (5 business days)

Pull together your AI Content Safety Council: Content, Legal/Compliance, IT/Security, and Product Marketing. Book a 90-minute kickoff. Your agenda is simple: agree on who owns what, walk through the workflow blueprint from Part 3, and assign someone to draft the one-page AI Content Policy. That policy needs to answer four questions. Which tools are approved? What data stays out of prompts? Who reviews what? How do we log outputs? Get the draft circulated by Friday and collect feedback over the weekend.

Week 2: Finalize and communicate (5 business days)

Lock the policy down. Share it with your full content team in a 30-minute all-hands. Walk through the workflow diagram, demo the prompt template from Part 2, explain the factual verification checklist. Answer questions. Make it crystal clear this is about enablement, not adding red tape. Post everything in your team wiki and Slack channel so people can reference it later.

Week 3: Run the pilot (5 business days)

Pick one low-stakes asset. A how-to blog post works well, or a product feature explainer. Run it through the complete governed workflow: fact-first prompt, AI draft, expert review, EEAT layering, final approval. Track your Edit Time Ratio and log every factual claim that needed correction.

Document what felt smooth and what felt like friction.

Week 4: Review and scale (5 business days)

Sit down with your council and analyze the pilot metrics. Did the workflow actually reduce review cycles, or did it add steps that slowed things down? Refine your prompt template and approval process based on what you learned. Then announce the phased rollout: two more writers adopt the workflow next week, the rest of the team the week after.

Ongoing: Quarterly governance reviews

Every 90 days, reconvene the council. Review your tooling stack, update the policy for new risks, assess whether your KPIs are actually improving.

Governance isn't a launch. It's a rhythm you maintain.

Conclusion: Scaling Content with Confidence, Not Fear

You came here worried about hallucinations, data leaks, and search penalties.

Now you've got something most content teams don't: a system that lets you scale AI-assisted content without gambling your credibility. The best AI SEO tools aren't the ones with the longest feature lists or the slickest demos. They're the ones that slot securely into your governed workflow, where prompts are structured, outputs are verified, and every published piece carries a human signature.

Your competitors are stuck choosing between two bad options: ignore AI and fall behind on volume, or adopt it recklessly and risk everything they've spent years building. You're taking the third path. AI drafts faster than any writer on your team, but it never publishes alone. Your council owns the risks. Your KPIs prove the system works.

Start with the 30-day plan. You don't need perfection on day one. You need a repeatable process that your team trusts and leadership can defend. That's how you turn a mandate into a competitive advantage. Safely.

FAQ: Navigating Common AI Content Concerns

Does Google penalize AI-generated content?

No. Google explicitly states it rewards helpful, original content regardless of how it's produced. The risk isn't a penalty. It's invisibility.

Content that lacks experience, expertise, authoritativeness, and trustworthiness (E-E-A-T) won't rank, whether AI wrote it or not. Our playbook builds those signals into your workflow from the start, so you're not guessing what Google wants to see.

What are the biggest risks of using AI for content?

Three stand out. First, data leakage: 10% of enterprise prompts contain sensitive corporate information. Second, factual hallucinations that damage credibility. Vague prompts yield error rates of 38.3%, which means lazy inputs create expensive cleanup down the line. Third, producing generic content that fails E-E-A-T checks and gets buried in search results.

How can I use AI for SEO without hurting rankings?

Follow the governed workflow. Use Fact-First Prompting (Part 2) to reduce hallucinations. Mandate human expert review to add genuine experience. Enhance outputs with author credentials and cited sources.

That's how you demonstrate E-E-A-T. Google can't tell if AI wrote the first draft, but it absolutely can tell when content lacks depth or real expertise.

Can I use free AI SEO tools for sensitive topics?

No. Free tiers rarely offer data protection agreements and often train models on your inputs.

For anything involving proprietary data or customer information, use a commercial tool with a signed Data Processing Agreement. The cost of a subscription is nothing compared to a data breach or competitive intelligence leak.

How much time does this save if we still need human review?

Teams see 30–50% reductions in time-to-first-draft. You're not eliminating review. You're shifting human effort from creation to strategic validation, which lets you scale output and quality simultaneously. Writers stop staring at blank pages and start editing from a solid foundation instead.

Conclusion: Scaling Content with Confidence, Not Fear

You started here worried about hallucinations, data leaks, and search penalties. Now you've got a framework that actually addresses all three.

The best AI SEO tools aren't the ones with the longest feature lists. They're the ones that fit inside a governed system where humans stay accountable, prompts are engineered for accuracy, and every output gets verified before it ships. That's it.

This isn't theoretical anymore. Over 76% of SaaS companies are already using AI in their products (Source: saas-capital.com), and 97% edit AI-generated content before publishing (Source: linkedin.com/schgrv). The teams winning right now aren't moving faster by skipping review. They're moving faster because they made review efficient and defensible.

Your mandate hasn't changed: scale content to meet growth targets. But scale without governance is a liability. Always has been.

Start this week. Pull your core team together, draft a one-page policy, and run a single pilot through the governed workflow. The confidence to scale doesn't come from waiting for a risk-free tool. It comes from controlled execution.

You don't need permission. You need a system that protects you when you move.

Frequently Asked Questions

Does Google penalize AI-generated content?

No. Google doesn't penalize content based on how it's created. Their algorithms evaluate quality, not origin [Source: developers.google.com].

The real risk is that AI-generated content often fails to demonstrate Experience, Expertise, Authoritativeness, and Trustworthiness (E-E-A-T), which does impact rankings. If your AI workflow includes human verification, clear authorship, and cited sources, you're meeting the same standards Google applies to any content. Same bar, different starting point.

What are the biggest risks of using AI for content?

Factual hallucinations top the list. Vague prompts yield error rates above 38% [Source: frontiersin.org], and most teams don't catch them until after publishing.

Data leakage is the second exposure. 10% of enterprise prompts contain sensitive corporate information [Source: reco.ai]. That's proprietary research, customer details, and competitive intelligence flowing into systems you don't control.

E-E-A-T failure comes third. Content that reads like a generic summary won't rank, even if it's technically accurate. Your governance framework from Part 1 exists specifically to mitigate these three risks before they become incidents.

How can I use AI for SEO without getting penalized by Google?

Build a governed workflow. Use structured prompts that reduce hallucinations, require human subject-matter experts to verify factual claims and add experience signals, and maintain clear authorship attribution.

Google rewards helpful, trustworthy content regardless of how the first draft was created [Source: developers.google.com]. The 97% of companies who edit and review AI output before publishing [Source: linkedin.com/schgrv] understand that AI accelerates drafting, but humans ensure credibility. You're not hiding the AI part. You're just making sure what ships actually works.

Can I use free AI SEO tools for sensitive topics?

Not safely.

Free tools typically lack enterprise-grade security controls, data processing agreements, and compliance certifications (SOC 2, GDPR, HIPAA). When you input proprietary research, customer data, or competitive intelligence into a free tool, you have no contractual protection against data retention, model training on your inputs, or third-party access. For regulated industries or confidential content, invest in vendor-reviewed platforms with documented security postures. Or use open-source models deployed on your own infrastructure.

How much time does this save if we need so much human review?

The time savings happen in the drafting phase. AI can generate a structured first draft in minutes instead of hours, letting your team focus effort on high-value work: fact-checking, adding proprietary insights, optimizing for E-E-A-T, and strategic editing.

Teams report 40–60% reductions in time-to-first-draft even with mandatory review gates. You're not replacing human judgment. You're reallocating it from blank-page syndrome to quality assurance and differentiation, which is exactly where experienced content managers add the most value. The bottleneck was never the review. It was staring at an empty document for three hours.