February 10th, 2026

Building Proprietary Data Assets That AI Search Engines Will Cite

Warren Day

You've noticed your organic traffic plateauing, despite doing everything 'right' with your blog. You search for "best AI SEO tools," hoping for a silver bullet—some software that'll crack the code on Google's AI Overviews and keep your pipeline full. But what if the most powerful tool isn't something you buy? It's something you build.

Here's what's actually happening: 86.8% of commercial search terms now display AI-generated elements, and only 4.5% of URLs cited in those answers match traditional Page 1 organic results. The game has fundamentally changed. Your competitors aren't just other websites anymore—they're whoever AI engines decide to cite as authoritative sources.

Most B2B SaaS marketers respond by adding another subscription to the stack. Another keyword tracker. Another content optimizer. Another AI writing assistant. They're buying tools to play the old game faster, while the new game rewards something completely different.

Look, in the age of AI Overviews and Search Generative Experience, competitive advantage has shifted from ranking #1 to being the indispensable, cited source. This requires moving beyond content and keywords to strategically building proprietary data assets—structured, permanent, and licensed for AI consumption.

This isn't theoretical.

You'll learn the exact 4-step technical framework for packaging data so AI systems are compelled to cite it, the 10-20-70 investment rule that separates winners from tool-collectors, and how to measure what actually matters when citations replace clicks. Your AI SEO optimization strategy starts with rethinking what you're optimizing in the first place.

The AI Citation Shift: Why Your Old SEO Playbook Is Obsolete

Your ranking strategy just became a citation strategy.

86.8% of commercial search terms now display a Search Generative element. When someone searches for "best project management software" or "B2B SaaS pricing models," they're not scrolling past ten blue links anymore. They're reading an AI-synthesized answer at the top of the page, complete with embedded sources and follow-up prompts.

Here's the number that should recalibrate your entire AI SEO optimization approach: only 4.5% of the URLs cited in SGE answers directly match a Page 1 organic URL.

You can rank #3 for your target keyword and still be invisible in the answer that 77.8% of searchers actually see.

The game isn't "get to position one" anymore. It's "become the source the AI quotes."

Google AI does cite sources. SGE displays source carousels with clickable attribution links. So do Perplexity, Bing Copilot, and ChatGPT's search mode (though ChatGPT itself is a chatbot, not a search engine - the platforms that matter are the ones indexing and retrieving live web data). But these systems don't cite based on keyword density or backlink count alone. They cite based on data structure, attribution clarity, and machine-readable provenance signals.

Your old playbook optimized for crawlers that ranked pages. The new playbook optimizes for retrieval systems that extract, synthesize, and attribute facts. If your content isn't packaged as a citable asset - with structured metadata, explicit licensing, and persistent identifiers - you're furnishing the research while your competitors get the citation.

The shift isn't coming. It's already here, and the gap between cited and ignored is widening every quarter.

The 10-20-70 Rule: Your New AI SEO Investment Thesis

Most B2B SaaS companies are burning budget backwards. They dump 80% into tools and content mills, toss 20% at strategy, then can't figure out why Perplexity never mentions them.

Flip it.

The 10-20-70 rule (borrowed from Google's innovation framework and rebuilt for AI citations) gives you a resource split that actually matches how these systems pick sources:

10% on algorithm tracking. Watch what's shifting in Google SGE, Perplexity, ChatGPT citations. Set alerts for when you drop out. This is surveillance work. Keep it lean.

20% on enabling tools. Schema generators, DOI registration, version control, citation monitors. Force multipliers, not magic bullets. You need them. They're also commodities anyone can buy.

70% on proprietary data assets. Original research. Industry benchmarks. Structured datasets. Expert analysis that didn't exist before you published it. This is where you build something competitors can't knock off by subscribing to the same SaaS tool you use.

Here's why the split matters: AI engines don't cite tools. They cite unique, verifiable information packaged so their retrieval systems can trust it. When Perplexity answers "What's the average churn rate for B2B SaaS?" it's not pulling from your blog post about reducing churn. It's citing whoever published a structured dataset of 500 companies with clean methodology, version control, and a DOI.

Your competitors are still grinding out volume. Generic posts targeting keywords that matter less every quarter. You're going to build one canonical asset that gets cited hundreds of times across dozens of queries.

That's the bet. What follows is how you actually do it.

The Anatomy of an AI-Citable Data Asset

A proprietary data asset isn't a white paper. It's not a case study. It's structured, unique data that only you can publish because you're the one who collected it.

Think: anonymized product usage benchmarks across your customer base. Survey results from 500 industry professionals you polled. API performance metrics aggregated from your platform. Pricing trend analysis derived from your transaction data. These are datasets that answer questions no blog post can touch.

The difference matters. A blog titled "5 CRM Trends for 2025" is content—it synthesizes existing knowledge. A published dataset titled "CRM Adoption Rates by Company Size, Q1 2025" with 1,200 anonymized data points is an asset. One competes for attention. The other becomes the source that AI engines cite when someone asks, "What percentage of Series A companies use a CRM?"

Here's the reframe: You're not trying to "use AI with proprietary data." You're structuring your data so AI has no choice but to cite you when it answers questions in your domain.

Stripe publishes economic indices tracking global commerce trends. HubSpot releases annual marketing benchmarks with breakdowns by industry and company size. IBM Research assigns DOIs to technical papers and datasets. These aren't marketing plays disguised as research—they're data contributions that happen to cement brand authority every time an AI references them.

For your company, this might look like:

Usage benchmarks: "Average time-to-value by customer segment" or "Feature adoption curves across your user base."

Industry surveys: Original research on buying behavior, tool stack composition, or budget allocation in your vertical.

Performance data: Aggregated metrics showing how your category performs (load times, conversion rates, integration success rates).

The asset must be specific, versioned, and impossible to replicate without your access. Generic insights won't earn citations. Unique data will.

Step 1: Package Your Data with AI-Readable Metadata

Your dataset is invisible until you tell AI crawlers what it is.

Search engines and LLMs don't intuitively understand that your "2024 SaaS Pricing Benchmark Report" is a structured dataset. You need explicit markup—a machine-readable label that says "this is data, here's what it measures, here's who made it, and here's how to cite it."

That label is schema.org/Dataset. It's the vocabulary AI systems actually use to identify and parse data assets. Without it, your proprietary research looks like just another blog post to a crawler.

The Required Properties

Implement Dataset schema using JSON-LD (JavaScript Object Notation for Linked Data), embedded in a <script type="application/ld+json"> tag in your page's <head> or <body>.

The bare minimum you need:

- name: The title of your dataset

- description: A concise summary (150-250 characters)

- url: The canonical URL where the dataset lives

- creator: Your organization or author, structured as an entity

- datePublished: Original release date

- dateModified: Last update timestamp

- license: A machine-readable license URL (e.g., Creative Commons)

- identifier: A persistent ID—ideally a DOI, which we'll cover in Step 2

Enriching Context with Advanced Properties

If you want to stand out in retrieval-augmented generation (RAG) pipelines, add these:

- variableMeasured: The specific metrics your dataset tracks (e.g., "average contract value," "churn rate")

- temporalCoverage: The time period covered (e.g., "2024-01-01/2024-12-31")

- spatialCoverage: Geographic scope if relevant (e.g., "North America")

Here's a working example for a fictional SaaS benchmark:

```json
{
  "@context": "https://schema.org",
  "@type": "Dataset",
  "name": "2024 B2B SaaS Pricing Benchmark Report",
  "description": "Pricing data from 347 seed to Series B SaaS companies, covering contract values, discounting, and packaging models.",
  "url": "https://yourcompany.com/data/saas-pricing-2024",
  "creator": {
    "@type": "Organization",
    "name": "YourCompany",
    "url": "https://yourcompany.com"
  },
  "datePublished": "2024-03-15",
  "dateModified": "2024-11-20",
  "version": "1.2",
  "license": "https://creativecommons.org/licenses/by/4.0/",
  "identifier": "https://doi.org/10.5555/example",
  "variableMeasured": ["average contract value", "discount rate", "pricing tier count"],
  "temporalCoverage": "2024-01-01/2024-12-31",
  "spatialCoverage": "North America"
}
```
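If the metadata lives in your CMS or build pipeline rather than in hand-written markup, the script tag can be generated. The following is a minimal sketch, not a definitive implementation: `render_jsonld_script` is a hypothetical helper name, and it assumes the metadata arrives as a plain Python dict using the schema.org property names listed above.

```python
import json


def render_jsonld_script(metadata: dict) -> str:
    """Wrap schema.org Dataset metadata in a JSON-LD <script> tag."""
    payload = {"@context": "https://schema.org", "@type": "Dataset", **metadata}
    body = json.dumps(payload, indent=2, ensure_ascii=False)
    # Escape "</" so a stray "</script>" inside a string value cannot
    # close the tag early. "\/" is a valid JSON escape for "/".
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    print(render_jsonld_script({
        "name": "2024 B2B SaaS Pricing Benchmark Report",
        "url": "https://yourcompany.com/data/saas-pricing-2024",
        "license": "https://creativecommons.org/licenses/by/4.0/",
    }))
```

Generating the tag from one source of truth keeps the visible page and the markup from drifting apart when you update the dataset.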

Validation and Deployment

Use Google's Rich Results Test or the Schema Markup Validator (validator.schema.org) to validate your JSON-LD before publishing. Syntax errors break parsing entirely. AI crawlers won't guess what you meant. They'll just skip your dataset.
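A quick local check can catch the most basic mistakes before you reach for an online validator. This sketch only verifies that the JSON parses and that the "bare minimum" properties from this article are present. The `REQUIRED` tuple mirrors that list, not any official requirement, and `check_dataset_jsonld` is a hypothetical name.

```python
import json

# The "bare minimum" properties listed earlier in this article.
REQUIRED = ("name", "description", "url", "creator", "datePublished",
            "dateModified", "license", "identifier")


def check_dataset_jsonld(raw: str) -> list[str]:
    """Return a list of problems; an empty list means the basic checks passed."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg} at line {exc.lineno}"]
    if not isinstance(data, dict):
        return ["top-level JSON value must be an object"]
    problems = []
    if data.get("@type") != "Dataset":
        problems.append('"@type" should be "Dataset"')
    for key in REQUIRED:
        if not data.get(key):
            problems.append(f"missing property: {key}")
    return problems


if __name__ == "__main__":
    for problem in check_dataset_jsonld('{"@type": "Dataset", "name": "Demo"}'):
        print(problem)
```

It does not replace a real validator, which also checks property types and nesting.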

Once live, this markup transforms your data from a static page into a citable, discoverable entity that LLMs can reference with confidence.

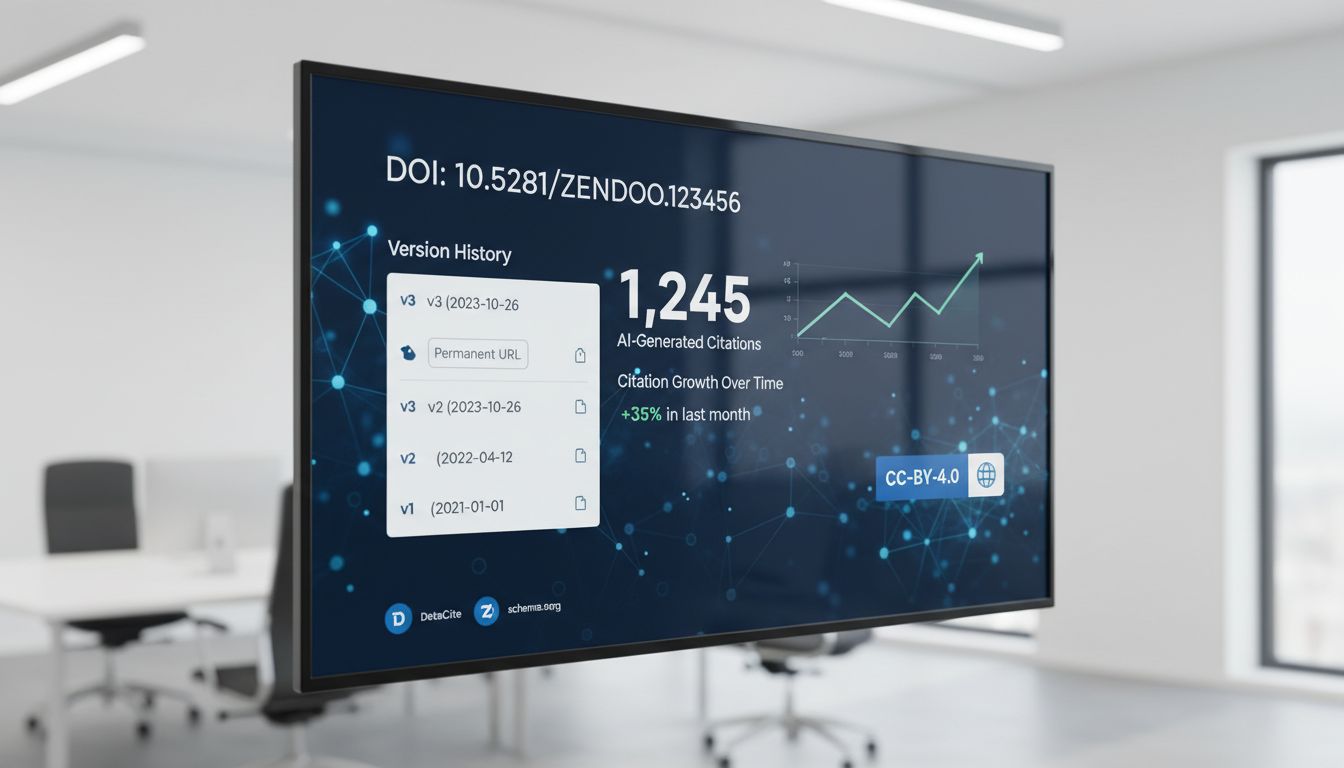

Step 2: Get a Permanent Digital Object Identifier (DOI)

A Digital Object Identifier (DOI) is the academic world's answer to link rot. AI engines treat it like a trust badge.

Think of a DOI as a permanent forwarding address for your dataset. Even if you move servers, rebrand, or restructure your site, the DOI resolves to the current location. Academic journals have used this system for decades to keep citations valid. LLMs are borrowing the same logic: if a source has a DOI, it's stable enough to cite.

AI retrieval systems prioritize sources that won't disappear tomorrow. A blog post from 2019 might 404 next week. A dataset with a DOI registered through DataCite carries a guarantee of persistence. That permanence signals authority. Authority drives citation selection in retrieval-augmented generation pipelines.

The registration process is simpler than you think. DataCite and Crossref are the two DOI registration agencies you're most likely to deal with. DataCite specializes in datasets and research outputs. Crossref focuses on scholarly publications. For most B2B SaaS companies publishing proprietary data, DataCite is the right fit.

You'll need a DataCite member account—either direct (if you're a research institution) or through a repository partner like Zenodo or Figshare. Costs vary. Academic institutions often pay annual membership fees around $500–$1,500. Commercial entities can access DOI minting through repository services at no cost per DOI.

Once registered, you deposit metadata: title, creator names, publisher (your company), publication year, resource type (Dataset), version number, and rights statement. This metadata becomes the permanent citation record. Then you mint the DOI—a unique alphanumeric string like 10.5281/zenodo.123456. Embed it in your dataset page's schema markup and human-readable citation block.
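The human-readable citation block can be built from the same metadata you deposit. Here is a rough sketch, assuming a "Creator (Year). Title (Version) [Data set]. Publisher. DOI" layout. That layout is an illustrative choice rather than a format the registrar mandates, `format_citation` is a hypothetical helper, and `10.5555/example` is the placeholder DOI from the schema example, not a real identifier.

```python
def format_citation(creator: str, year: int, title: str,
                    version: str, publisher: str, doi: str) -> str:
    """Build a one-line citation string from deposited metadata."""
    # Accept either a bare DOI ("10.5555/example") or a full resolver URL.
    prefix = "https://doi.org/"
    doi_url = doi if doi.startswith(prefix) else prefix + doi
    return (f"{creator} ({year}). {title} (Version {version}) "
            f"[Data set]. {publisher}. {doi_url}")


if __name__ == "__main__":
    print(format_citation(
        creator="YourCompany",
        year=2024,
        title="2024 B2B SaaS Pricing Benchmark Report",
        version="1.2",
        publisher="YourCompany",
        doi="10.5555/example",
    ))
```

Whatever layout you pick, keep it identical across every version of the dataset so the only parts that change are the version and the DOI.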

NASA Earthdata sets the gold standard here. Every dataset they publish includes a DOI, structured metadata, and clear citation guidance. AI engines cite NASA data constantly because the infrastructure guarantees reliability.

For a SaaS founder, getting a DOI is like registering your business entity. A one-time administrative step that establishes long-term credibility. It costs less than a month of your SEO tool stack—and unlike those tools, it makes your data the asset AI can't ignore.

Step 3: Implement a Versioning & Archiving Strategy

Here's the problem nobody warns you about: the moment you update your dataset, you risk breaking every AI citation that pointed to the original version.

An LLM that cited "Q4 2024 SaaS Pricing Benchmarks" now references data that no longer exists at that URL. The citation breaks. Your authority score takes a hit. The AI engine learns not to trust your URLs.

You need immutable versioning. The same discipline you apply to your API releases.

Structure your dataset URLs with an explicit version segment, the way you would version an API: /saas-pricing-benchmarks/v1/, /saas-pricing-benchmarks/v2/, /saas-pricing-benchmarks/v3/. Each version lives at a permanent, unchanging URL. When you publish new data, create a new version endpoint rather than overwriting the old one. Simple in concept. Harder in practice, because it feels wasteful to maintain old data nobody's actively using. But AI engines are using it, constantly, to validate their citation chains.

In your schema.org markup, declare the version explicitly using schema:version and schema:dateModified. If v2 builds on v1, add schema:isBasedOn pointing to the previous version's DOI. This creates a citation chain that AI engines can follow, understanding the provenance and evolution of your data.
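One way to keep the chain consistent is to derive each new release's markup from the previous one. The sketch below assumes every version gets its own DOI and its own /vN/ URL. `new_version` is a hypothetical helper, and the `10.5555/example.v1` and `.v2` identifiers are placeholders.

```python
import copy


def new_version(previous: dict, version: str, date_modified: str,
                url: str, doi_url: str) -> dict:
    """Derive the JSON-LD record for a new release without mutating the old one."""
    record = copy.deepcopy(previous)
    record.update({
        "version": version,
        "dateModified": date_modified,
        "url": url,
        "identifier": doi_url,
        # Point back at the release this one builds on.
        "isBasedOn": previous["identifier"],
    })
    return record


if __name__ == "__main__":
    v1 = {
        "@context": "https://schema.org",
        "@type": "Dataset",
        "name": "SaaS Pricing Benchmarks",
        "version": "1.0",
        "dateModified": "2024-03-15",
        "url": "https://yourcompany.com/saas-pricing-benchmarks/v1/",
        "identifier": "https://doi.org/10.5555/example.v1",
    }
    v2 = new_version(
        v1, "2.0", "2025-03-15",
        "https://yourcompany.com/saas-pricing-benchmarks/v2/",
        "https://doi.org/10.5555/example.v2",
    )
    print(v2["isBasedOn"])
```

Because the old record is copied rather than edited, the v1 page keeps serving exactly the markup it was published with.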

Archive every version in a public repository. GitHub works for raw CSVs and JSON. Zenodo handles larger datasets with their own DOIs. This serves two purposes: it keeps old citations alive indefinitely, and it signals to AI systems that you maintain rigorous data governance. Broken links are the fastest way to lose citation authority.

Keep a public changelog at /saas-pricing-benchmarks/changelog/ that documents what changed between versions. AI systems increasingly parse these to understand data reliability and methodology consistency.

One common question: "How do I use AI to build a database for this?" You're asking the wrong question. You're not using AI to build the database—you're building a version-controlled, structured database for AI to cite. The discipline comes from you, not the tool.

Think of it this way: your data asset is now infrastructure. You wouldn't deploy code without version control. Don't publish data without it either.

Step 4: Declare Clear, Machine-Readable Licensing

AI systems operate in a legal gray zone. Without an explicit license, LLMs treat your data as potentially off-limits, even if you want them to cite it.

The outcome is absurd: the dataset you worked hardest to build sits unused because you forgot to add three lines of code.

Choose a permissive, attribution-required license. Creative Commons Attribution 4.0 (CC-BY-4.0) is the gold standard for content and datasets. If you're publishing raw data files like CSVs or JSON, consider Open Data Commons Attribution License (ODC-BY), which is purpose-built for databases. Both require users (including AI crawlers) to credit you. That's exactly the behavior you want to encourage.

You need two layers.

First, add the license URL to your schema.org markup:

"license": "https://creativecommons.org/licenses/by/4.0/"

Second, host a human-readable LICENSE.md file in the same directory as your dataset. Link to it from your dataset landing page. This dual approach satisfies both machine parsers and human auditors who might review your data governance.

Reputable AI search platforms like Google AI Overviews, Perplexity, and Microsoft Copilot are increasingly designed to respect usage rights and provide attribution. A missing or ambiguous license introduces legal friction. Their retrieval pipelines may skip your data entirely, not because it lacks quality, but because it lacks permission.

Look, you're not giving away control here. You're removing the excuse AI engines have for ignoring you. Licensing is what turns proprietary data into a cited, traffic-driving asset instead of a legal liability their systems avoid.

The Best AI SEO Tools Are Enablers, Not Stars

You searched for "best AI SEO tools" because you wanted a solution. Here's the thing: the most effective AI SEO optimization tools aren't the ones promising to write your content or automate your rankings.

They're the ones that help you build, package, and monitor the proprietary data asset you now understand you need.

Stop shopping for stars (tools that claim to "do AI SEO for you"). Start investing in enablers that let you create citation-worthy assets and measure whether AI engines actually use them.

AI SEO Tools: The Enabler Stack

| Tool Category | Purpose (For Your Data Asset) | Examples (Free/Paid) |
|---|---|---|
| Data Packaging | Generating JSON-LD schema markup for datasets | Merkle's Schema Markup Generator (free), Google's Structured Data Markup Helper (free) |
| Persistent Identification | Minting DOIs for stable, citable URLs | DataCite, Crossref |
| Monitoring & Analytics | Tracking AI citations and visibility | Bing AI Performance Report (free), Otterly.ai, Ahrefs AI Features, SerpAPI |
| Provenance & Structure | Adding data lineage and transparency signals | W3C PROV Libraries (free) |
| General SEO Audit | Ensuring foundational site health | Google Search Console (free), Ahrefs, Screaming Frog |

Analysis of the Table

Notice what's missing? Content spinners. Keyword stuffing automation. "AI blog generators."

The majority of free AI SEO tools worth using exist to help you build infrastructure, not churn out mediocre posts. Google Search Console, Bing's AI Performance Report, and open-source JSON-LD generators are all about giving you the scaffolding you need. Even paid tools like DataCite or Otterly.ai are about enabling your asset, not replacing your strategy.

This stack has compounding returns.

A schema generator is a one-time setup cost. A DOI is permanent. Citation monitoring compounds as your dataset gets referenced more widely. You're not renting visibility. You're building an owned distribution channel that strengthens every time an LLM cites you.

The best AI SEO optimization tools are boring. They're the scaffolding, not the building.

From Citation to Conversion: Measuring What Actually Matters

Citations without conversions are just expensive vanity metrics.

Your CFO doesn't care that Perplexity mentioned your brand seventeen times last month. They care whether those mentions drove pipeline. The shift from ranking to citation demands an equally fundamental shift in how you measure success.

Stop tracking "AI visibility" as if it's an end goal. Start tracking it as a top-of-funnel signal that either converts or doesn't.

The three-layer attribution framework

First, discover where you're being cited. Use tools like Otterly.ai, Bing's AI Performance Report, or SerpAPI to monitor which pages appear in AI-generated answers. Set up weekly alerts for citation drops. They're your early warning system.

Second, attribute that traffic correctly. AI referrals often show up as direct traffic or get lumped into generic "google / organic" buckets. Create specific UTM parameters: utm_source=google_ai, utm_source=perplexity, or utm_source=chatgpt. For medium, use utm_medium=ai_overview or utm_medium=ai_citation. Tag every dataset page, every DOI landing page, every piece of citable content with these parameters.

Third, track what converts. In Google Analytics 4, set up conversion events for the actions that matter to your SaaS business: demo requests, API key sign-ups, dataset downloads, pricing page visits. Microsoft found that AI-powered search delivers 76% higher high-intent conversion rates than traditional search, but only if you're measuring it.
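The UTM tagging in the second layer is easy to automate for the links you control, such as the URL in your citation block or the target your DOI resolves to. This is a minimal sketch using the standard library. `add_utm` is a hypothetical helper and the parameter values come from the examples above. It only affects links you publish yourself, and a link an AI engine rewrites may not keep the parameters.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url: str, source: str, medium: str) -> str:
    """Return `url` with utm_source and utm_medium set, keeping other parameters."""
    parts = urlsplit(url)
    # Note: dict() keeps only the last value if a parameter is repeated.
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query.update({"utm_source": source, "utm_medium": medium})
    return urlunsplit(parts._replace(query=urlencode(query)))


if __name__ == "__main__":
    print(add_utm("https://yourcompany.com/data/saas-pricing-2024",
                  "perplexity", "ai_citation"))
```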

The ultimate ROI metric isn't citation count. It's qualified demos per cited source.

If your proprietary salary benchmarking dataset gets cited in ChatGPT and drives twelve enterprise demo requests, that's a business outcome. If it gets cited and drives zero, you've built the wrong asset. The data will tell you which assets are worth expanding and which are just generating empty mentions.
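As an illustration of the "qualified demos per cited source" metric, the sketch below counts qualified demo requests per `utm_source`. The event records are a hypothetical export format, not the schema of Google Analytics 4 or any other tool, and the `qualified` flag is assumed to come from your own sales process.

```python
from collections import Counter


def demos_by_source(events: list[dict]) -> Counter:
    """Count qualified demo requests per utm_source."""
    return Counter(
        event["utm_source"]
        for event in events
        if event["event"] == "demo_request" and event.get("qualified")
    )


if __name__ == "__main__":
    # Hypothetical conversion events tagged with the UTM sources used above.
    sample = [
        {"utm_source": "perplexity", "event": "demo_request", "qualified": True},
        {"utm_source": "perplexity", "event": "dataset_download"},
        {"utm_source": "chatgpt", "event": "demo_request", "qualified": False},
        {"utm_source": "google_ai", "event": "demo_request", "qualified": True},
    ]
    for source, count in demos_by_source(sample).most_common():
        print(source, count)
```

A source that shows citations but never appears in this count is the "empty mentions" case described above.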

Build the measurement infrastructure before you scale the content. Otherwise you're optimizing blind.

Common Pitfalls: The 5 Biggest 'AI SEO' Fails to Avoid

You can build the perfect data asset and still get zero AI citations.

Here are the five mistakes that kill citation potential before you even start. Most are embarrassingly easy to fix, which makes them even more frustrating when they tank your visibility.

1. Blocking AI Crawlers in robots.txt

Your legal team panics about AI scraping. They block GPTBot, BingBot, and everything else in robots.txt. Problem solved, right?

Wrong. You just went invisible to every AI system that could've cited your work. Zero citations, zero visibility, zero upside from all that structured data work you did.

The fix: Pull up your robots.txt right now. Explicitly allow AI crawlers for anything public-facing. Block only the genuinely proprietary stuff behind paywalls or internal tools. This isn't complicated, but it requires actually checking the file instead of setting a blanket "block everything" policy.
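Checking the file can be scripted with Python's standard `urllib.robotparser`. The sketch below parses a sample robots.txt and reports which crawler user agents may fetch a given URL. The sample rules and the `/data/` and `/internal/` paths are assumptions for illustration, so substitute your own file and the agents you care about.

```python
from urllib.robotparser import RobotFileParser

# Sample policy: public data is open, internal tools are blocked for everyone.
ROBOTS_TXT = """\
User-agent: GPTBot
Allow: /data/
Disallow: /internal/

User-agent: *
Disallow: /internal/
"""


def allowed_agents(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Map each user agent to whether robots_txt lets it fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    crawlers = ["GPTBot", "PerplexityBot", "Bingbot"]
    print(allowed_agents(ROBOTS_TXT,
                         "https://yourcompany.com/data/saas-pricing-2024",
                         crawlers))
    # A blanket block makes every crawler report False.
    print(allowed_agents("User-agent: *\nDisallow: /\n",
                         "https://yourcompany.com/data/saas-pricing-2024",
                         crawlers))
```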

2. Publishing Static, Unversioned Datasets

You ship "The 2024 SaaS Benchmarks Report" and move on to the next project.

Six months later, AI engines are citing outdated numbers because you never versioned properly. Your credibility takes the hit, not theirs. They just sourced what you published.

The fix: Use immutable versioned URLs from day one. /v1/, /v2/, whatever structure works. Keep a public changelog so people (and AI systems) can see what changed. Archive old versions on GitHub or Zenodo. Citations stay valid, your reputation stays intact.

3. Omitting Machine-Readable Licensing

No explicit license? AI systems assume legal risk and skip your content entirely. Doesn't matter how good your data is.

Ambiguity kills citations faster than bad data.

The fix: Add a schema.org license property to your JSON-LD markup. CC-BY-4.0 and ODC-BY are both solid choices depending on your use case. Drop a human-readable LICENSE.md file next to your dataset too. Make it obvious what people can and can't do with your work.

4. Relying on Unstructured Content Layout

Walls of text don't parse well. Vague headings don't help. Missing semantic HTML actively hurts you.

AI models favor clear heading hierarchies, bullet lists, and answer-focused blocks. Dense paragraphs with no structure? They'll skip past your content to find something easier to extract.

The fix: Restructure with strict H1 → H2 → H3 hierarchy. Keep paragraphs short, two to four sentences max. Add FAQ sections that map directly to common queries. The more parseable your layout, the more likely you get cited.
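A heading audit is simple to automate. The following sketch uses the standard `html.parser` module to flag pages without exactly one H1 or with skipped levels, such as an H1 followed directly by an H3. `HeadingAudit` and `heading_problems` are hypothetical names, and the two rules are one interpretation of a "strict" hierarchy rather than a documented ranking requirement.

```python
from html.parser import HTMLParser


class HeadingAudit(HTMLParser):
    """Collect heading levels (1-6) in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        # Matches h1..h6 only; tags such as "hr" or "head" are ignored.
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html: str) -> list[str]:
    """Return a list of hierarchy problems; empty means the outline is clean."""
    audit = HeadingAudit()
    audit.feed(html)
    problems = []
    h1_count = audit.levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one h1, found {h1_count}")
    for prev, cur in zip(audit.levels, audit.levels[1:]):
        if cur > prev + 1:
            problems.append(f"h{prev} is followed by h{cur}, skipping a level")
    return problems


if __name__ == "__main__":
    print(heading_problems("<h1>Report</h1><h3>Method</h3>"))
```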

5. Flying Blind Without AI Monitoring

You publish, you optimize, you hope. Meanwhile you have no idea if you're appearing in Google AI Overviews, Perplexity, or ChatGPT. Visibility could've dropped three months ago and you'd never know.

The fix: Set up monitoring with Bing's AI Performance Report, Otterly.ai, or SerpAPI. Track citation frequency, not just traditional rankings. Build alerts for sudden drops so you can actually respond when something breaks.
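The alerting logic itself can be very small once you have citation counts per page. This sketch compares two periods and flags pages whose citations fell by at least a chosen fraction. The input dicts are a hypothetical export, not the output format of any of the tools named above, and the 50% default threshold is an arbitrary starting point.

```python
def citation_drops(previous: dict[str, int], current: dict[str, int],
                   threshold: float = 0.5) -> dict[str, tuple[int, int]]:
    """Return pages whose citation count fell by at least `threshold` (a fraction)."""
    drops = {}
    for page, before in previous.items():
        after = current.get(page, 0)  # a page missing this period counts as zero
        if before > 0 and (before - after) / before >= threshold:
            drops[page] = (before, after)
    return drops


if __name__ == "__main__":
    last_week = {"/data/saas-pricing-2024": 20, "/blog/churn": 4}
    this_week = {"/data/saas-pricing-2024": 8, "/blog/churn": 4}
    for page, (before, after) in citation_drops(last_week, this_week).items():
        print(f"ALERT {page}: {before} -> {after} citations")
```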

Each of these mistakes negates the technical infrastructure you've already built. Avoid them, and your proprietary data becomes the competitive moat AI search was designed to reward.

Conclusion

You searched for best AI SEO tools because something felt off about the old playbook. You were right. The shift from ranking to citation isn't a passing trend. It's a structural change in how AI engines surface and reward content.

The 10-20-70 rule gives you the blueprint: 10% on algorithm tracking, 20% on enabling tools, 70% on building proprietary data assets. Those four technical steps (package with schema markup, secure a DOI, version rigorously, declare machine-readable licensing) aren't nice-to-haves. They're the infrastructure that makes your data indispensable to LLMs.

Your first asset doesn't need to be huge. Audit what you already have. Customer surveys, product usage stats, support ticket trends. Package one insight this quarter using the framework above. Add the metadata. Register the DOI. Declare the license.

That single, structured dataset becomes your first real AI SEO optimization tool.

Stop buying subscriptions. Start building assets. AI engines cite what they can't ignore, and they can't ignore properly packaged, proprietary truth.

Frequently Asked Questions

How to cite an AI search engine?

When you're citing output from an AI like ChatGPT or Google SGE in your own research or content, treat it like personal communication. Include the prompt, model name, date, and platform in your citation. Something like: (ChatGPT, GPT-4, March 15, 2024, OpenAI).

This is completely different from the goal of being cited by an AI, which is what this article actually addresses.

How to APA cite ChatGPT?

The current APA format is: OpenAI. (Year). ChatGPT (Month Day version) [Large language model]. https://chat.openai.com [Source: APA Style Blog].

For example: OpenAI. (2024). ChatGPT (March 14 version) [Large language model]. https://chat.openai.com. Check the latest APA Style blog for updates since these guidelines are still evolving alongside the technology.

What is the most cited source in ChatGPT?

There isn't a single "most cited" source. It varies by query and topic.

Research from platforms like TryProfound shows that domains with strong E-E-A-T signals, structured data, and clear licensing appear most frequently in AI citations: Wikipedia, major news outlets, academic repositories like PubMed, and government sites. Your goal isn't to beat Wikipedia. It's to build the same technical trust signals so your proprietary data joins that trusted cohort.

What country is #1 in AI?

According to the Stanford AI Index and Tortoise Media's Global AI Index, the United States and China lead in different categories. The U.S. dominates research output and foundational models. China leads in implementation and patents [Source: Stanford AI Index, 2024]. The EU, UK, Canada, and Israel are also significant players with specialized strengths.

The competitive landscape is fluid, with billions in investment flowing across multiple regions. "Number one" depends entirely on which metric you prioritize.

How to build an AI-based search engine?

This article focuses on being cited by existing AI search engines, not building your own.

Constructing an AI search engine requires deep expertise in machine learning, natural language processing, retrieval-augmented generation (RAG), and significant infrastructure investment. Well beyond the scope of an SEO strategy guide. If you're a B2B SaaS company, your leverage comes from making your data indispensable to the engines that already exist, not competing with Google or OpenAI directly.